How to use Docker-Compose for Ollama

I had a hard time finding straightforward guides on getting Ollama set up with Docker-Compose, so this is how it’s done.

Overview

What you’ll get

How to deploy Ollama with Docker-Compose

Nvidia GPU pass-through for Docker-Compose (tested on Linux)

Open-WebUI (or any front-end) can be used with this Ollama setup

Contents

Docker-Compose.yml file for Ollama

How to enable GPU pass-through in Docker-Compose for Ollama

How to test Ollama after deploying with Docker-Compose

This guide assumes that you already have Docker and Docker-Compose installed, and that both are up to date.

1. Docker-Compose.yml file for Ollama

    services:
      ollama:
        image: ollama/ollama:latest
        ports:
          - "11434:11434"
        healthcheck:
          test: ollama --version || exit 1
        command: serve
        volumes:
          - <local_directory>:/root/.ollama
        gpus:
          - driver: nvidia
            count: all

Notes about the Docker-Compose file for Ollama:

11434/TCP is the default port for Ollama

Replace <local_directory> with the host path where you want Ollama's files to live. The models you download with Ollama are stored here.
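Before the first launch, it can help to create the data directory yourself and let Compose check the file for syntax errors. This is a minimal sketch, not part of the original setup: OLLAMA_DATA_DIR is a hypothetical variable standing in for <local_directory>, both function names are made up, and the compose file is assumed to be docker-compose.yml in the current directory.

```shell
# OLLAMA_DATA_DIR is a hypothetical variable standing in for <local_directory>.

# Create the host directory that will be mounted at /root/.ollama.
prepare_ollama_dir() {
  local dir="$1"
  mkdir -p "$dir" && echo "Ollama data directory ready: $dir"
}

# Ask Compose to parse the file; it prints nothing and exits 0 when the file is valid.
validate_compose_file() {
  docker-compose -f "${1:-docker-compose.yml}" config -q
}

# Usage:
#   prepare_ollama_dir "$OLLAMA_DATA_DIR"
#   validate_compose_file docker-compose.yml
```

If the second function prints an error, the message normally points at the offending line, which is handy for spotting indentation mistakes in the YAML.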

2. Enable GPU pass-through in Docker-Compose for Ollama

To use an Nvidia GPU in Docker, you need to: (1) install the Nvidia Container Toolkit, then (2) test the GPU in Docker.

Install the Nvidia Container Toolkit

Installation instructions: see Nvidia’s official installation guide for the Container Toolkit.

This is what it sounds like: a tool to make Nvidia GPUs work in Docker. TL;DR: this is 2-3 commands. First you enable the Nvidia repo, then you install the toolkit.
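Nvidia's instructions also include a step that registers the Nvidia runtime with Docker (nvidia-ctk runtime configure, followed by a Docker restart). As a hedged sketch for checking the result, the helper below reads the text printed by docker info and looks for nvidia on the Runtimes line. has_nvidia_runtime is a made-up name, not something shipped with Docker or the toolkit.

```shell
# has_nvidia_runtime is a hypothetical helper name, not part of Docker or the toolkit.
# It reads `docker info` output on stdin and succeeds if the Runtimes line lists nvidia.
has_nvidia_runtime() {
  grep -i 'runtimes' | grep -qi 'nvidia'
}

# Usage (after installing the toolkit):
#   sudo nvidia-ctk runtime configure --runtime=docker
#   sudo systemctl restart docker
#   docker info | has_nvidia_runtime && echo "nvidia runtime registered"
```

If the check fails, the real test is still the docker run command in the next step; this only narrows down where to look.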

Test your GPU in Docker

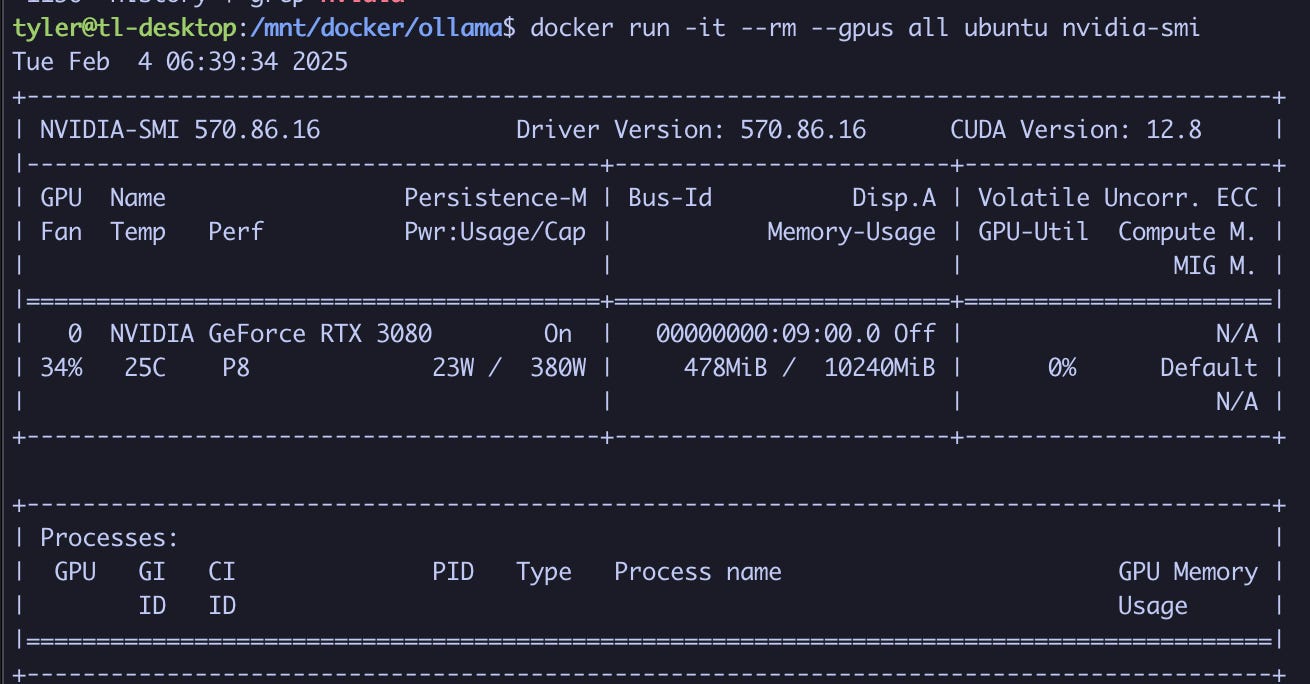

Now make sure that Docker recognizes your GPU with this command (ubuntu here is only the container image, so this works on Linux distributions other than Ubuntu too):

    docker run -it --rm --gpus all ubuntu nvidia-smi

The output should be the usual nvidia-smi table listing your GPU.

3. Launch Ollama with Docker-Compose and Test

Launch Ollama with Docker-Compose

Now that the Nvidia Container Toolkit is installed and your docker-compose.yml file is ready, you can test it out. Go into the directory where your docker-compose.yml file lives, and run:

    docker-compose up -d

Verify Ollama is running in Docker

To verify that Ollama is running:

    docker ps

Your output (after a minute) should show STATUS as healthy, and you should see your port bound (e.g. 0.0.0.0:11434->11434/tcp).

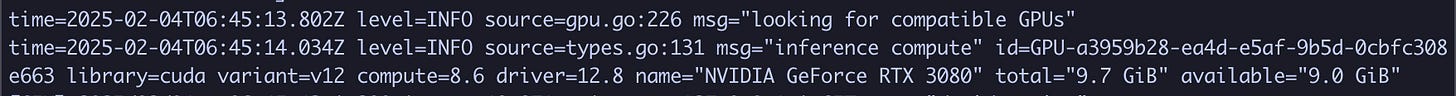
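Instead of re-running docker ps by hand, the wait can be scripted. A rough sketch, assuming the container is named ollama (use whatever name docker ps shows); wait_for_healthy is a hypothetical helper that polls the health status Docker records from the healthcheck in the compose file.

```shell
# wait_for_healthy is a hypothetical helper; "ollama" as a container name is an assumption.
# Polls the container's health status until it reports "healthy" or the tries run out.
wait_for_healthy() {
  local name="$1"
  local tries="${2:-30}"
  local status=""
  while [ "$tries" -gt 0 ]; do
    status="$(docker inspect --format '{{.State.Health.Status}}' "$name" 2>/dev/null)"
    if [ "$status" = "healthy" ]; then
      echo "healthy"
      return 0
    fi
    tries=$((tries - 1))
    sleep 2
  done
  echo "not healthy (last status: ${status:-unknown})"
  return 1
}

# Usage:
#   wait_for_healthy ollama && curl http://localhost:11434/api/version
```

The curl call in the usage line asks Ollama's HTTP API for its version, which confirms that the published port answers from the host and not just inside the container.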

Verify that Ollama recognizes the Nvidia GPU

To verify that the GPU is recognized within the Ollama Docker container:

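    docker logs ollama

If that name is not found, use the container name shown by docker ps (Compose usually prefixes it with the project name), or run docker-compose logs ollama from the same directory, which takes the service name instead.

You should see a couple of lines related to the GPU: one where Ollama is searching for a compatible GPU, and another where it is recognized.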
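The startup log can be long, so a keyword filter makes the GPU lines easier to find. A small sketch, with the caveat that the keyword list is a guess at what the relevant lines contain and gpu_log_lines is a made-up helper name; the container name ollama is assumed as above.

```shell
# gpu_log_lines is a hypothetical helper name; the keyword list is an assumption.
# Reads log text on stdin and keeps only lines that mention GPU-related keywords.
gpu_log_lines() {
  grep -i -E 'gpu|cuda|nvidia'
}

# Usage (2>&1 merges stderr into the pipe so both output streams are searched):
#   docker logs ollama 2>&1 | gpu_log_lines
```

If nothing is printed, widen the pattern or read the full log before concluding that the GPU was not detected.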

Conclusion

That’s it! I’ll create a separate guide for actually using Ollama in Docker, and for adding a front-end to it such as Open-WebUI.